tl;dr: I wrote a small script to transcribe audio into a text file, all processed locally!

Do you spend a ton of time on YouTube? Me too! It’s an amazing resource to learn all sorts of things, buuuuuut…

- YouTube videos are often full of commercials and sponsorship messages;

- They are not always organized in chapters;

- They are not searchable: I love long-form videos, but I can’t watch every one of them just to find out whether a tutorial covers what I’m looking for.

What would solve all that? For me, it’s text! I looked around for a tool that could transcribe videos into text locally. No luck! So I decided to try to make one with Python and Whisper, OpenAI’s speech recognition model:

- It’s got a great recognition rate for English (and supposedly other languages);

- It runs locally on a relatively modern laptop;

- It’s well documented!

I started out by drafting the general flow of the script. It would go something like this:

- Split a source audio file into chunks using FFmpeg and Pydub, based on dips in sound level relative to the rest of the audio and on how long those dips last;

- Process each chunk with Whisper to transcribe it;

- Finally, save the transcription in a text file.
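The three steps above could be sketched roughly like this. It’s a minimal sketch, not the actual script: the silence thresholds, the `Chunk{i}.wav` naming and the function names are my own assumptions. It uses Pydub’s `split_on_silence` (Pydub relies on FFmpeg to decode most formats) and the `whisper` package’s `load_model` and `transcribe`.

```python
from pathlib import Path


def split_audio(source: str, chunk_dir: str) -> list[str]:
    """Split `source` on silences and export each chunk as a WAV file.

    Returns the chunk paths in the order they were created.
    """
    # Imported here so save_transcript() works even without Pydub installed.
    from pydub import AudioSegment
    from pydub.silence import split_on_silence

    audio = AudioSegment.from_file(source)  # FFmpeg does the decoding
    chunks = split_on_silence(
        audio,
        min_silence_len=700,             # assumption: a dip must last at least 0.7 s
        silence_thresh=audio.dBFS - 16,  # assumption: 16 dB below the average loudness
        keep_silence=200,                # keep 200 ms of padding so words aren't clipped
    )
    out_dir = Path(chunk_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, chunk in enumerate(chunks, start=1):
        path = str(out_dir / f"Chunk{i}.wav")
        chunk.export(path, format="wav")
        paths.append(path)
    return paths


def transcribe_chunks(paths: list[str], model_name: str = "base") -> list[str]:
    """Run Whisper on each chunk, in list order, and return the texts."""
    import whisper

    model = whisper.load_model(model_name)
    return [model.transcribe(p)["text"].strip() for p in paths]


def save_transcript(texts: list[str], target: str) -> None:
    """Write each chunk's text on its own line in the target file."""
    Path(target).write_text("\n".join(texts) + "\n", encoding="utf-8")
```

Chained together, that would be `save_transcript(transcribe_chunks(split_audio("talk.mp3", "chunks")), "talk.txt")`. Because `split_audio` returns the paths in creation order, `transcribe_chunks` never has to read the folder at all.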

How well did that workflow work? Pretty well! Except that the audio chunk files in the folder were processed in the wrong order:

| Audio chunk files | The worst processing order ever |
|---|---|
| Chunk1.wav, Chunk2.wav, Chunk3.wav (…) Chunk10.wav, Chunk11.wav, Chunk12.wav | Chunk1.wav, Chunk10.wav, Chunk11.wav, Chunk12.wav, Chunk2.wav, Chunk3.wav (…) |

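Changing the filename structure didn’t help… That order is what plain alphabetical sorting produces: names are compared character by character, so “Chunk10” lands before “Chunk2”. So instead, I appended the name of each chunk to a list as it was created. The audio transcription function would then work its way through that list in order rather than through the folder… and it worked!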
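Here’s a small illustration of the problem and of two ways around it: keeping the names in a list in creation order (what I did), or sorting with a “natural” key that compares the numbers as numbers. The `natural_key` helper is a hypothetical example, not part of the script.

```python
import re

# Filenames in the order they were created.
created = [f"Chunk{i}.wav" for i in range(1, 13)]

# Plain string sorting compares character by character: "1" < "2",
# so "Chunk10.wav" lands before "Chunk2.wav".
alphabetical = sorted(created)


def natural_key(name: str) -> list:
    """Split a name into text and integer parts so numbers compare numerically."""
    return [int(part) if part.isdigit() else part for part in re.split(r"(\d+)", name)]


# Alternative fix: sort with a natural-order key.
natural = sorted(created, key=natural_key)

print(alphabetical[:5])  # ['Chunk1.wav', 'Chunk10.wav', 'Chunk11.wav', 'Chunk12.wav', 'Chunk2.wav']
print(natural == created)  # True
```

The list approach is the simpler of the two, since it never depends on how the folder’s contents happen to be ordered.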

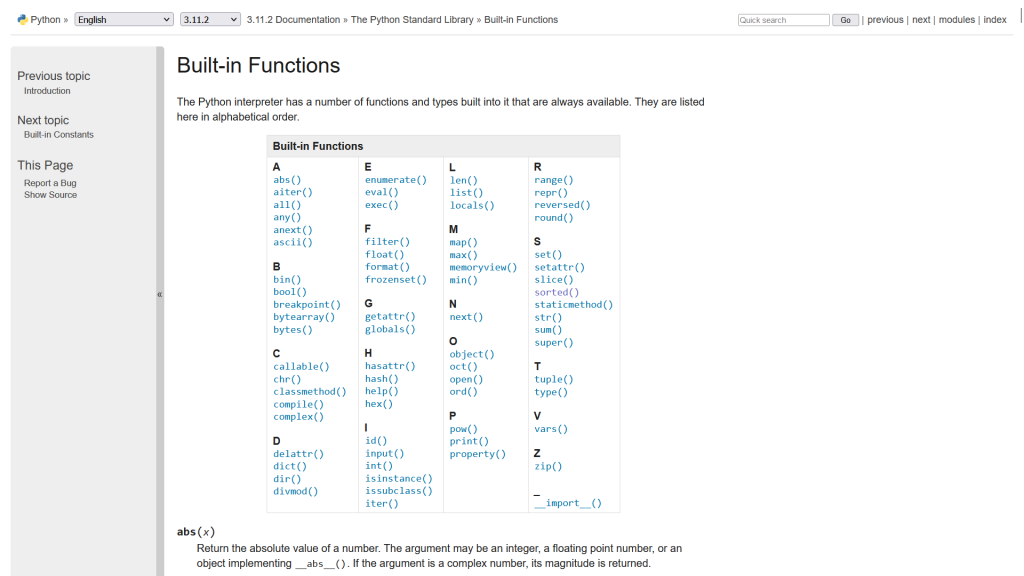

I was also really struggling to pass the source audio file and the target folder to the script… until I found out about argparse! It let me pass the source file, the target folder and the output file as command-line arguments, using argparse’s `ArgumentParser` class. To think it’s part of Python’s standard library and I’d never checked it out before 🙁
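A minimal sketch of what that could look like with `argparse.ArgumentParser`. The argument names (`source`, `--output-dir`, `--model`) are hypothetical; the real script may use different ones.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical options; the real script's arguments may differ.
    parser = argparse.ArgumentParser(
        description="Transcribe an audio file to text locally with Whisper."
    )
    parser.add_argument("source", help="path to the source audio file")
    parser.add_argument(
        "-o", "--output-dir", default="transcripts",
        help="folder where the chunks and the transcript are written",
    )
    parser.add_argument(
        "-m", "--model", default="base",
        help="Whisper model name, e.g. tiny, base or small",
    )
    return parser
```

Calling `build_parser().parse_args()` at the script’s entry point then gives you `args.source`, `args.output_dir` and `args.model`, and argparse generates the `-h` help text for free.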

So that’s great and all, but is the script fast enough to be worth using? There’s little point transcribing a 17-minute video if it takes an hour… Judge for yourself with this small selection of videos I processed on my laptop:

| Title | Running time (mm:ss) | Time to transcribe |
|---|---|---|
| I can save you money – Raspberry Pi Alternatives | 15:03 | 2 min 13 s |
| Modeling realistic sci-fi helmet with Rachel Frick part 1 | 16:44 | 1 min 20 s |
| Fundamental mentorship concepts | 33:57 | 56 s |
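To compare videos of different lengths, it helps to divide each running time by the time it took to transcribe. A quick calculation with the numbers from the table:

```python
# (running time, transcription time) in seconds, taken from the table.
runs = {
    "Raspberry Pi Alternatives": (15 * 60 + 3, 2 * 60 + 13),
    "Sci-fi helmet part 1": (16 * 60 + 44, 1 * 60 + 20),
    "Mentorship concepts": (33 * 60 + 57, 56),
}

for title, (running, transcribing) in runs.items():
    # Seconds of video transcribed per second of processing.
    speed = running / transcribing
    print(f"{title}: {speed:.1f}x faster than real time")
```

That works out to somewhere between roughly 7 and 36 times faster than real time on my laptop.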

I don’t know why Linus’ Raspberry Pi alternatives video took longer than the others. Is it because you talk a lot, Linus? Anyway, here we are!

If you want to give this script a go, you can find it in the repository! In addition to Python, you will need to install:

- FFmpeg

- The Pydub Python library

- Whisper (the openai-whisper Python package) and your model of choice (I went with the “base” model)
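If you’re not sure everything is in place, a quick check like this one can help. It’s a hypothetical helper, not part of the repository: it looks for the `ffmpeg` executable on your PATH and checks whether the `pydub` and `whisper` Python packages can be found (Whisper is installed from PyPI as `openai-whisper` but imported as `whisper`).

```python
import importlib.util
import shutil


def check_dependencies() -> dict[str, bool]:
    """Report which of the script's dependencies appear to be installed."""
    return {
        # FFmpeg is a separate program, so look for it on the PATH.
        "ffmpeg": shutil.which("ffmpeg") is not None,
        # find_spec returns None when a top-level module can't be found.
        "pydub": importlib.util.find_spec("pydub") is not None,
        "whisper": importlib.util.find_spec("whisper") is not None,
    }


for name, found in check_dependencies().items():
    print(f"{name}: {'OK' if found else 'missing'}")
```

The Whisper model itself doesn’t need a separate install step: it is downloaded the first time `whisper.load_model` is called with its name.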

Once you have all your dependencies installed, just run:

```shell
python "audio to text converter.py" -h
```

The script will coach you through everything it needs and tell you what it’s up to. That’s it – good luck!